I am a PhD student at the Hong Kong University of Science and Technology (HKUST), where I work on efficient deep learning (algorithm-hardware co-design) and human-centric computer vision. I am a member of the Vision and System Design Lab (VSDL), advised by Prof. Tim Kwang-Ting Cheng. I interned at Microsoft Research Asia (MSRA). Previously, I worked with Prof. Zhiqiang Shen from MBZUAI, Prof. Cewu Lu from SJTU, and Prof. Mani Srivastava from UCLA.

CV / Google Scholar / Twitter / Zhihu (知乎) / GitHub

[2024-03] I will join Snap Research as a research intern this summer. See you in Santa Monica!
[2023-09] One paper accepted by EMNLP 2023.
[2023-05] Started my internship at Microsoft Research Asia (MSRA).
[2022-05] One paper accepted by ICML 2022.
[2021-01] One paper accepted by TPAMI.
[2020-08] Started my PhD at the Hong Kong University of Science and Technology (HKUST).
[2020-06] Graduated from Shanghai Jiao Tong University (SJTU).
[2020-03] One paper accepted by CVPR 2020.
[2019-06] Started my research internship at the University of California, Los Angeles (UCLA).
[2019-03] One paper accepted by CVPR 2019.

My research interests lie in the general area of artificial intelligence, particularly efficient deep learning and human-centric computer vision. More concretely, my research focuses on quantization, algorithm-hardware co-design, human-object interaction, and healthcare. Representative papers are highlighted.

|

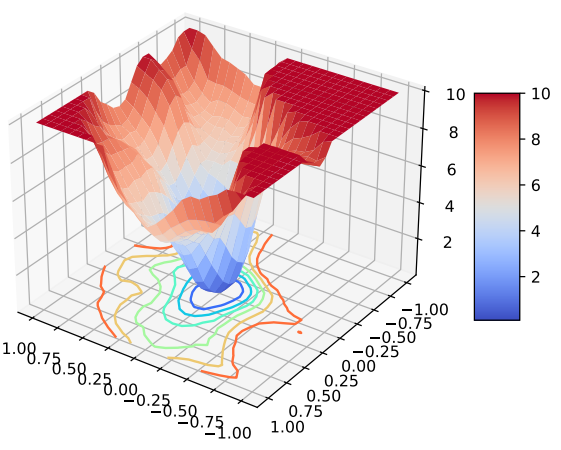

Xijie Huang, Zhiqiang Shen, Shichao Li, Zechun Liu, Xianghong Hu, Jeffry Wicaksana, Eric Xing, Kwang-Ting Cheng ICML, 2022 (Spotlight) Paper / Talk / Slides A novel stochastic quantization framework to learn the optimal mixed precision quantization strategy. |

|

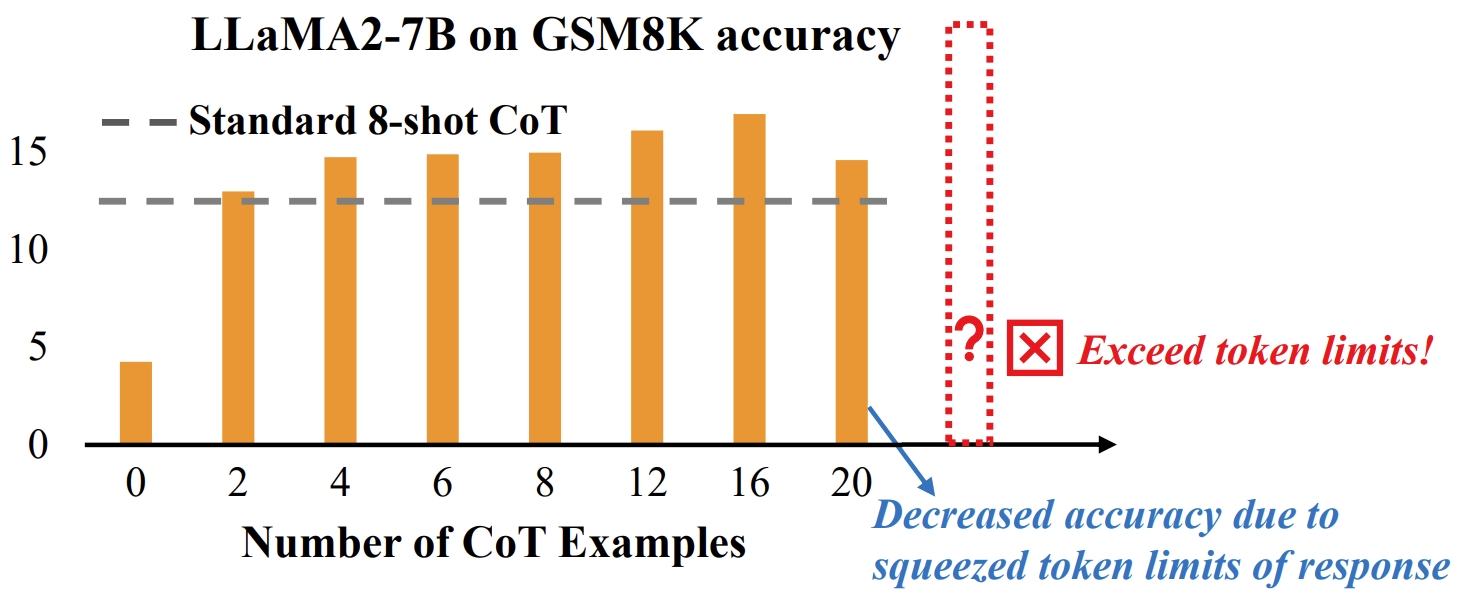

Xijie Huang, Li Lyna Zhang, Kwang-Ting Cheng, Fan Yang, Mao Yang In Submission Paper A novel approach to push the boundaries of few-shot CoT learning to improve LLM math reasoning capabilities. We propose a coarse-to-fine pruner as a plug-and-play module for LLMs, which first identifies crucial CoT examples from a large batch and then further prunes unimportant tokens. |

|

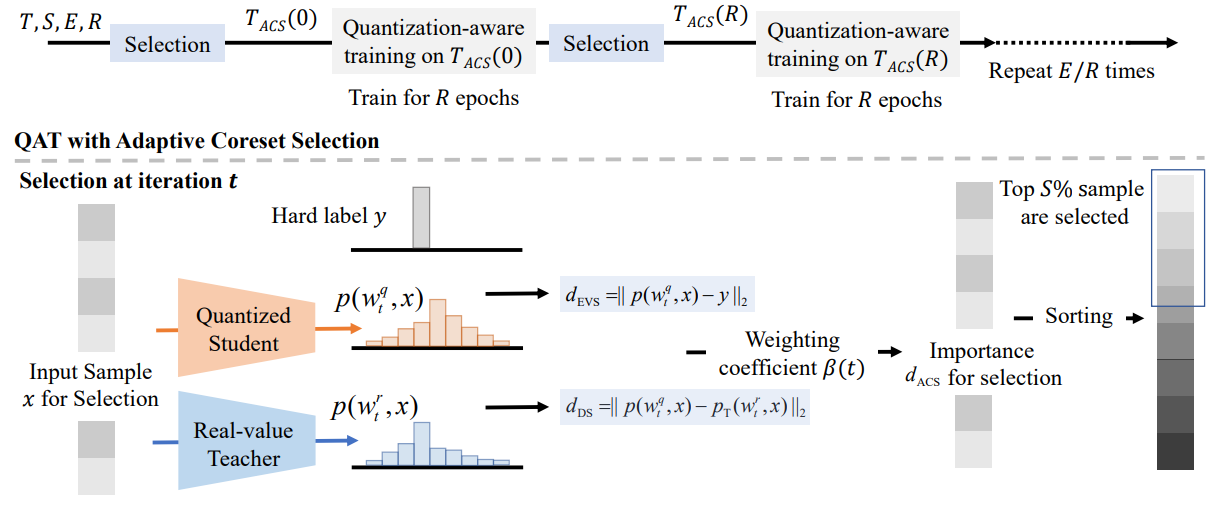

Xijie Huang, Zechun Liu, Shih-Yang Liu, Kwang-Ting Cheng In Submission Paper/Code A new angle through the coreset selection to improve the training efficiency of quantization-aware training. Our method can achieve an accuracy of 68.39% of 4-bit quantized ResNet-18 on the ImageNet-1K dataset with only a 10% subset, which has an absolute gain of 4.24% compared to the previous SoTA. |

|

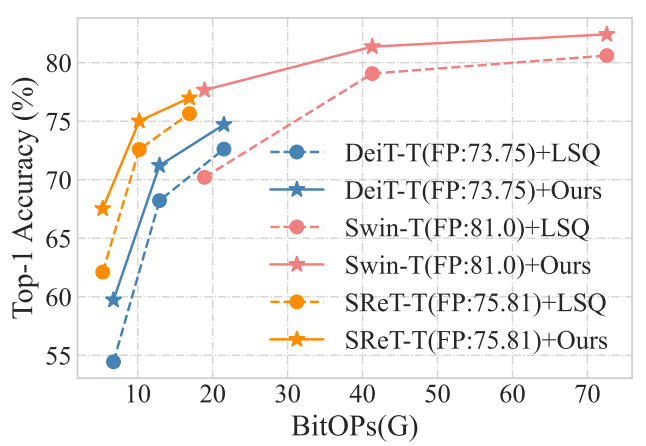

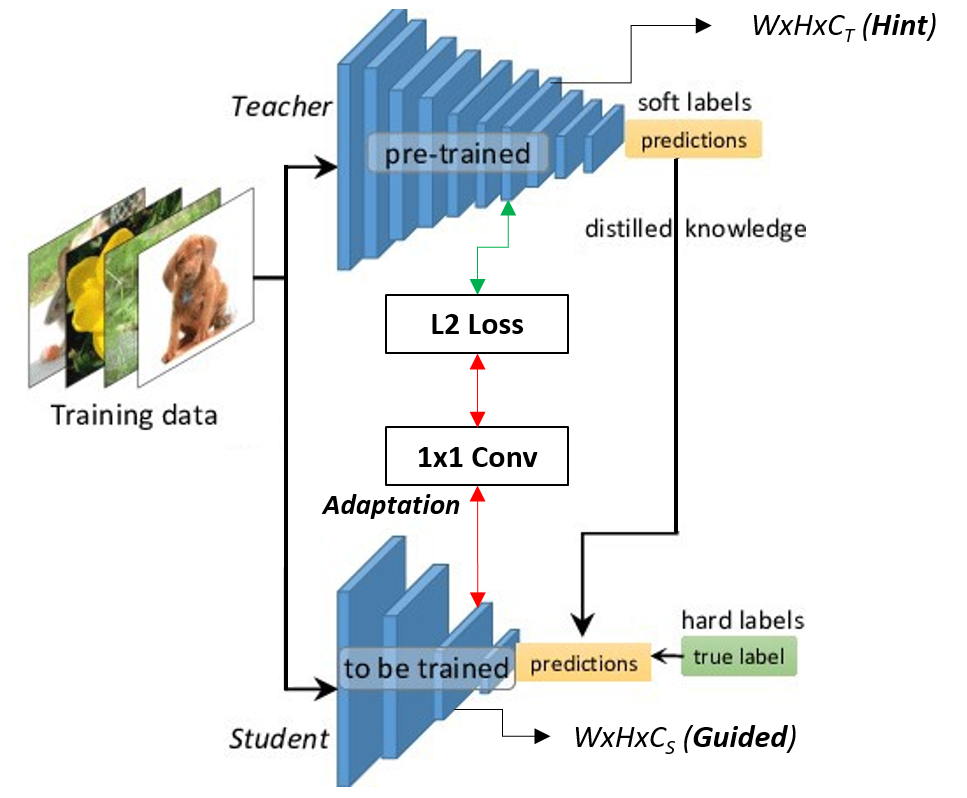

Xijie Huang, Zhiqiang Shen, Kwang-Ting Cheng In Submission Paper/Code An analysis of the underlying difficulty of ViT quantization in the view of variation. A multi-crop knowledge distillation-based quantization method is proposed. |

|

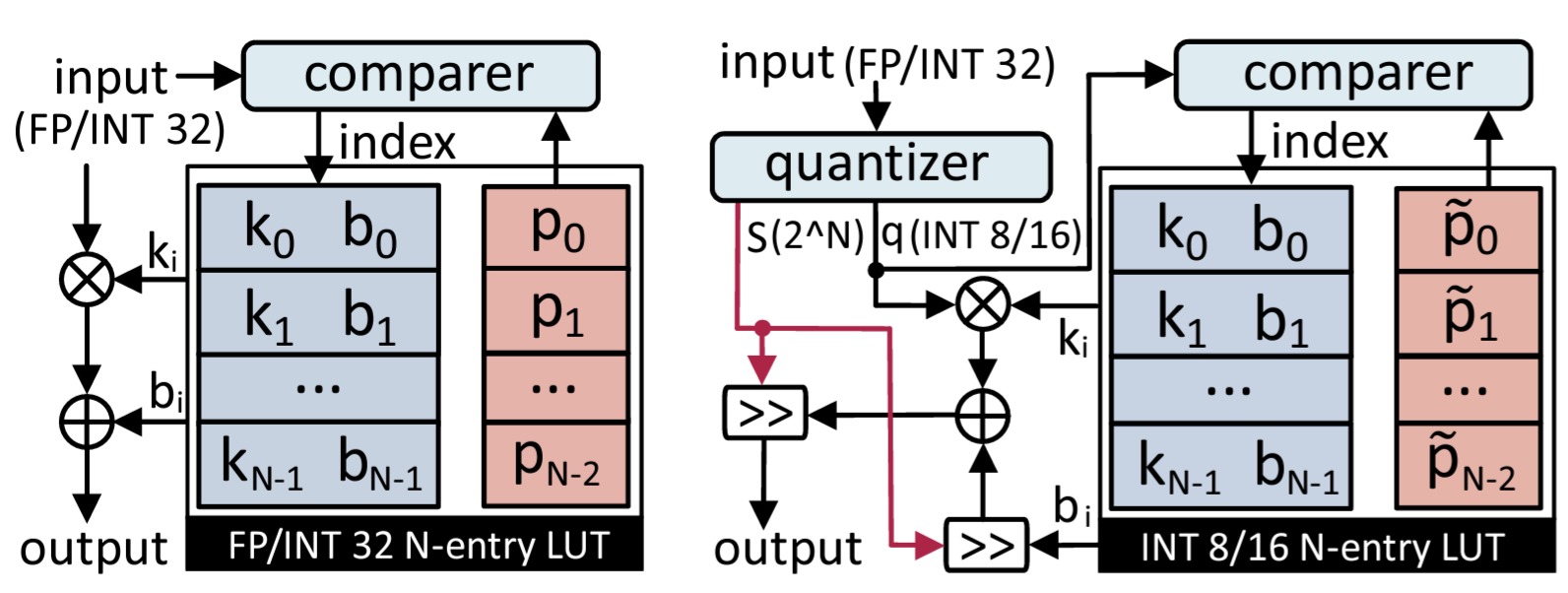

Pingcheng Dong, Yonghao Tan, Dong Zhang, Tianwei Ni, Xuejiao Liu, Yu Liu, Peng Luo, Luhong Liang, Shih-Yang Liu, Xijie Huang, Huaiyu Zhu, Yun Pan, Fengwei An, Kwang-Ting Cheng ACM/IEEE Design Automation Conference (DAC) 2024 Paper/Code A genetic LUT-Approximation algorithm namely GQA-LUT that can automatically determine the parameters with quantization awareness. The results demonstrate that GQA-LUT achieves negligible degradation on the challenging semantic segmentation task for both vanilla and linear Transformer models. Besides, proposed GQA-LUT enables the employment of INT8-based LUT-Approximation that achieves an area savings of 81.3~81.7% and a power reduction of 79.3~80.2% compared to the high-precision FP/INT 32 alternatives. |

|

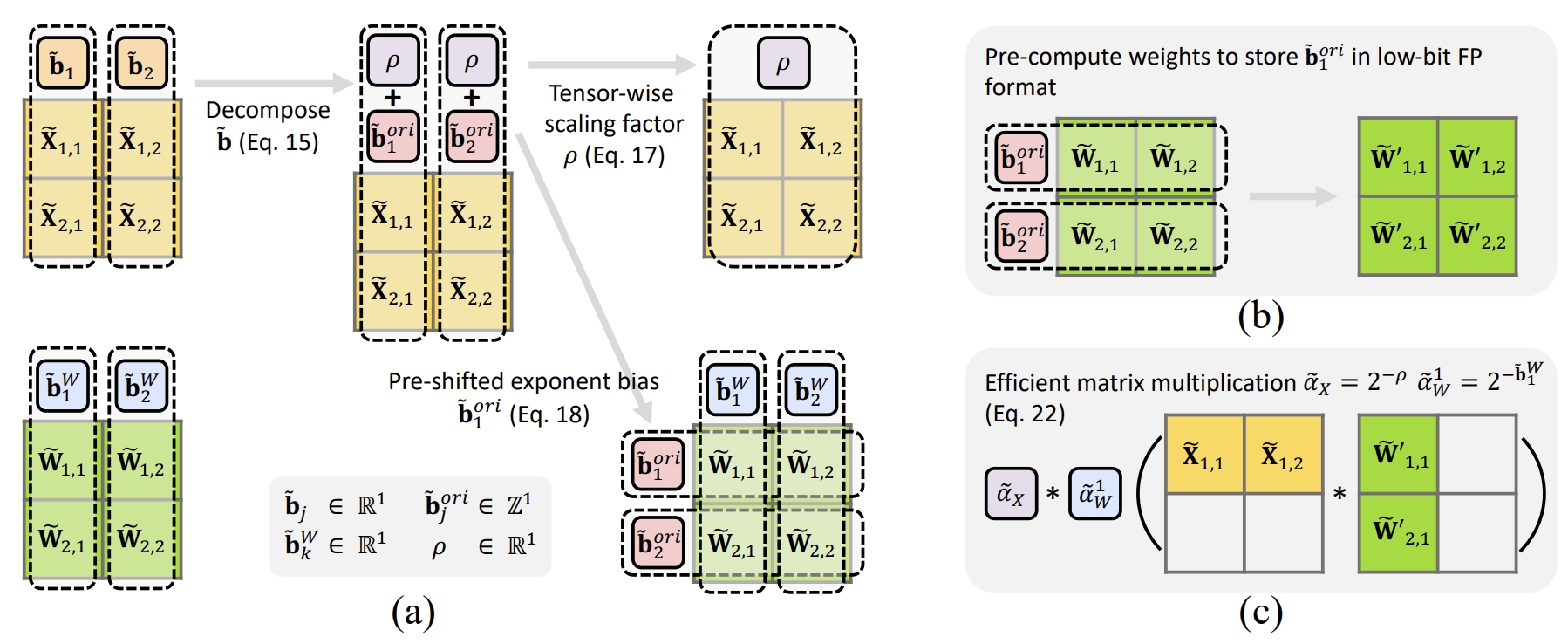

Shih-Yang Liu, Zechun Liu, Xijie Huang, Pingcheng Dong, Kwang-Ting Cheng EMNLP 2023 Paper/Code A per-channel activation quantization scheme with additional scaling factors that can be reparameterized as exponential biases of weights, incurring a negligible cost. Our method can quantize both weights and activations in the Bert model to only 4-bit and achieves an average GLUE score of 80.07, which is only 3.66 lower than the full-precision model, significantly outperforming the previous state-of-the-art method that had a gap of 11.48. |
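The reparameterization idea in the EMNLP 2023 entry can be illustrated with a small numeric sketch of the underlying algebra only. It rests on simplifying assumptions made for this illustration: scales are restricted to powers of two, each input channel gets one exponent chosen from its peak magnitude (a hypothetical rule), and the actual 4-bit quantizer is omitted. Because multiplying a float by 2^e only shifts its exponent, dividing activation channel c by 2^e_c and multiplying weight row c by 2^e_c leaves the matrix product unchanged. This is not the paper's implementation; `matmul`, `channel_exponents`, and `fold_scales` are illustrative helper names.

```python
import math


def matmul(x, w):
    """Plain matrix product of x (n x c) and w (c x m), both nested lists."""
    return [
        [sum(x[i][k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
        for i in range(len(x))
    ]


def channel_exponents(x):
    """Hypothetical rule: one power-of-two exponent per input channel, chosen so
    the channel's largest magnitude lands in [0.5, 1) after scaling."""
    exps = []
    for c in range(len(x[0])):
        peak = max(abs(row[c]) for row in x)
        # math.frexp(peak) returns (m, e) with peak == m * 2**e and 0.5 <= m < 1
        exps.append(math.frexp(peak)[1] if peak > 0 else 0)
    return exps


def fold_scales(x, w, exps):
    """Divide activation channel c by 2**exps[c] and multiply weight row c by
    2**exps[c]. math.ldexp only adjusts the binary exponent, so barring
    overflow/underflow no mantissa bits are lost."""
    x_scaled = [[math.ldexp(v, -exps[c]) for c, v in enumerate(row)] for row in x]
    w_folded = [[math.ldexp(v, exps[c]) for v in w[c]] for c in range(len(w))]
    return x_scaled, w_folded


# Toy activations whose two channels have very different ranges.
x = [[3.0, -0.25], [1.5, 0.125]]
w = [[1.0, 2.0], [0.5, -1.0]]

exps = channel_exponents(x)  # [2, -1]
x_scaled, w_folded = fold_scales(x, w, exps)
print(exps)
print(x_scaled)
print(matmul(x_scaled, w_folded) == matmul(x, w))  # True
```

In this simplified picture, a single per-tensor quantizer could then be applied to `x_scaled`, while the per-channel factors live entirely in the weights, which are prepared offline, so the matrix multiply needs no per-channel rescaling along its reduction dimension.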

|

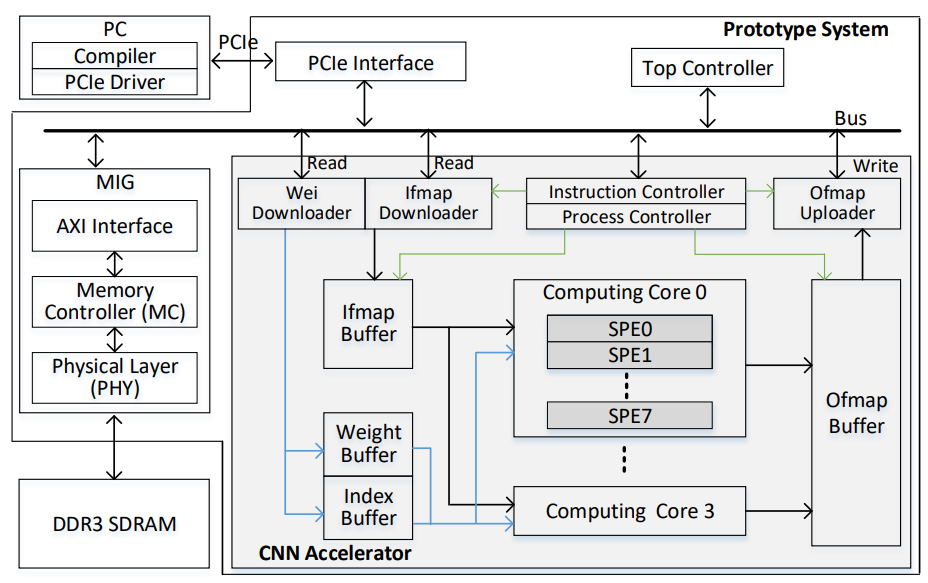

Xianghong Hu, Xuejiao Liu, Yu Liu, Haowei Zhang, Xijie Huang, Xihao Guan, Luhong Liang, Chi Ying Tsui, Xiaomeng Xiong, Kwang-Ting Cheng IEEE Transactions on Circuits and Systems II: Express Briefs (TCAS-II) 2023 Paper A tiny accelerator for mixed-bit sparse CNNs featuring a novel scheme of single vector-based compressed sparse filter (CSF) method and single input multiple output scratch pad (SIMO SPad) to effectively compress weight and fetch the needed input activation. |

|

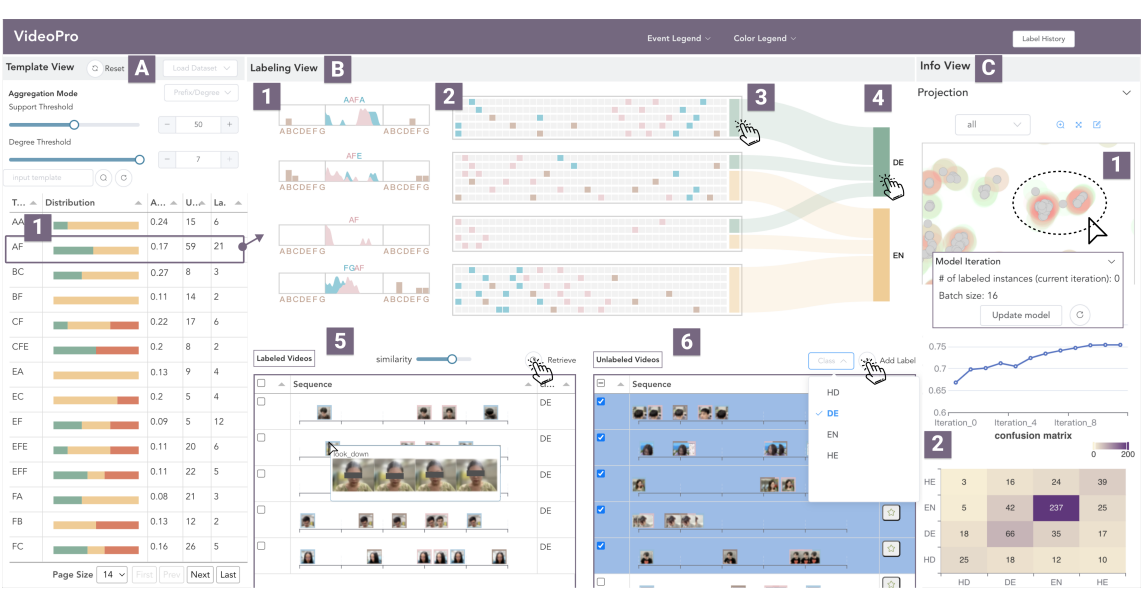

Jianben He, Xingbo Wang, Kam Kwai Wong, Xijie Huang, Changjian Chen, Zixin Chen, Fengjie Wang, Min Zhu, Huamin Qu IEEE Transactions on Visualization and Computer Graphics (VIS) 2023 Paper A visual analytics approach to support flexible and scalable video data programming for model steering with reduced human effort. |

|

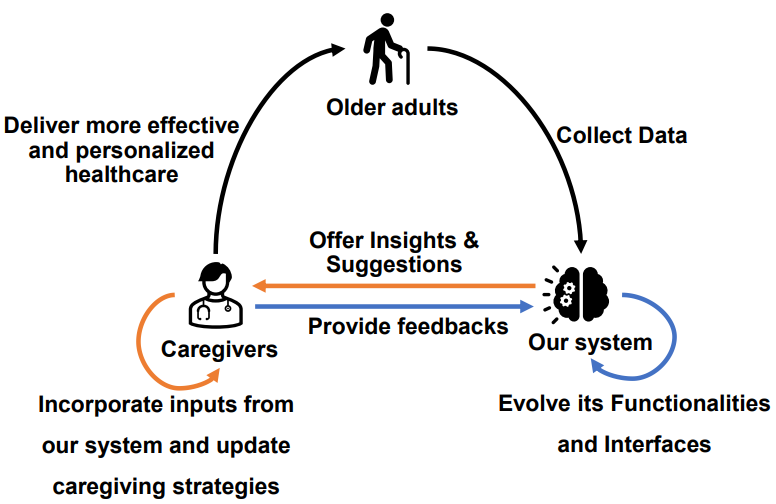

Xijie Huang, Jeffry Wicaksana, Shichao Li, Kwang-Ting Cheng AAAI Workshop on Health Intelligence, 2022 Paper An automatic, vision-based system for monitoring and analyzing the physical and mental well-being of senior citizens. |

|

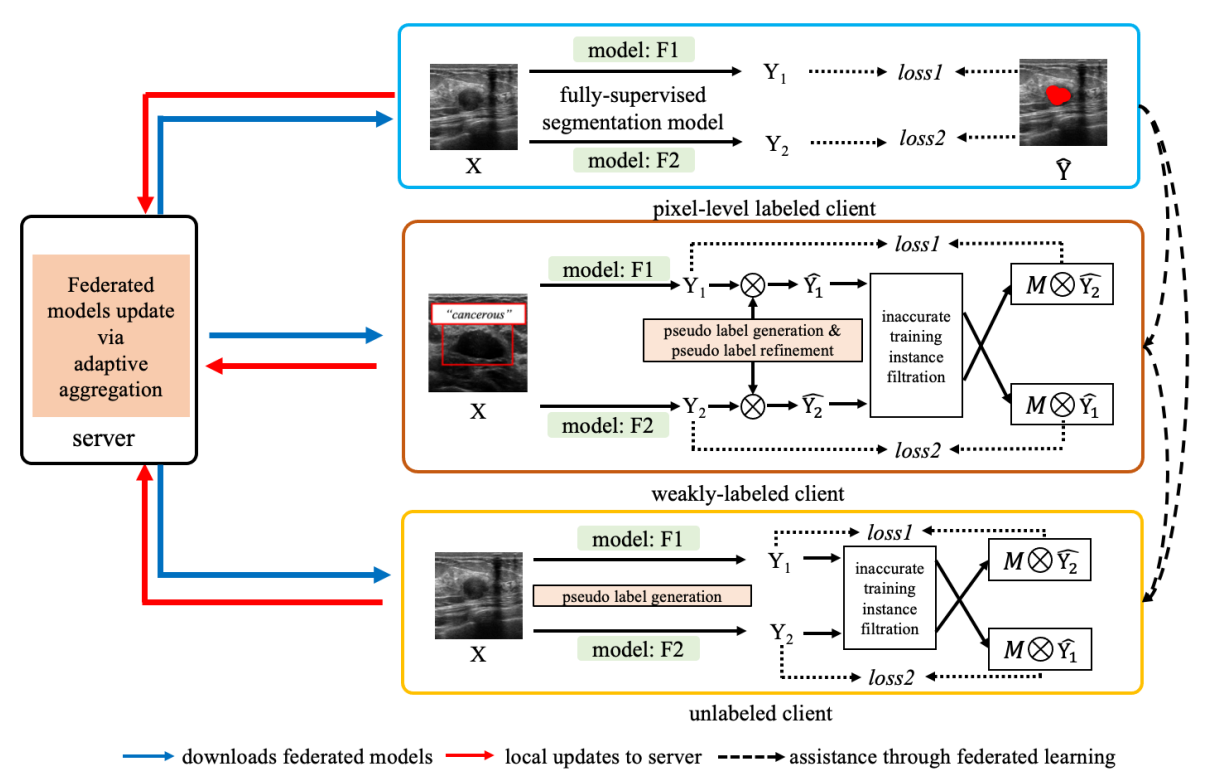

Jeffry Wicaksana, Zengqiang Yan, Dong Zhang, Xijie Huang, Huimin Wu, Xin Yang, Kwang-Ting Cheng IEEE Transactions on Medical Imaging (TMI), 2022 Paper/Code A label-agnostic unified federated learning framework, named FedMix, for medical image segmentation based on mixed image labels. In FedMix, each client updates the federated model by integrating and effectively making use of all available labeled data ranging from strong pixel-level labels, weak bounding box labels, to weakest image-level class labels. |

|

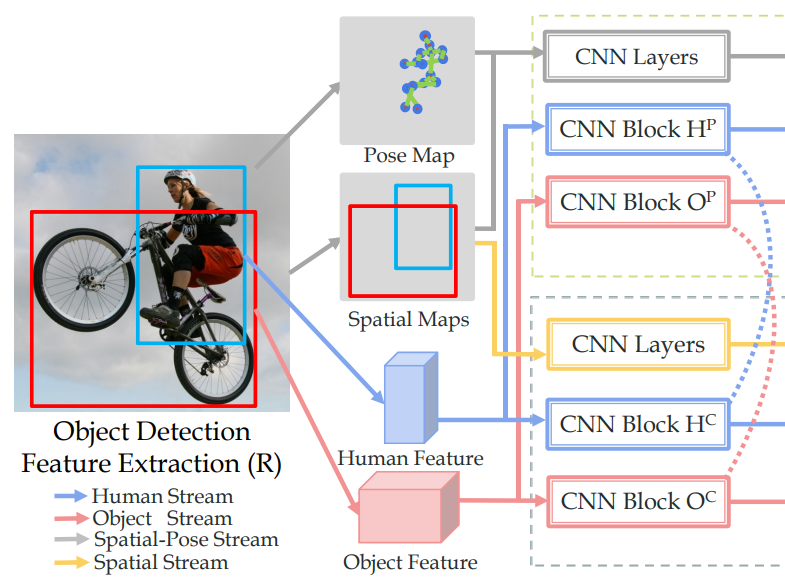

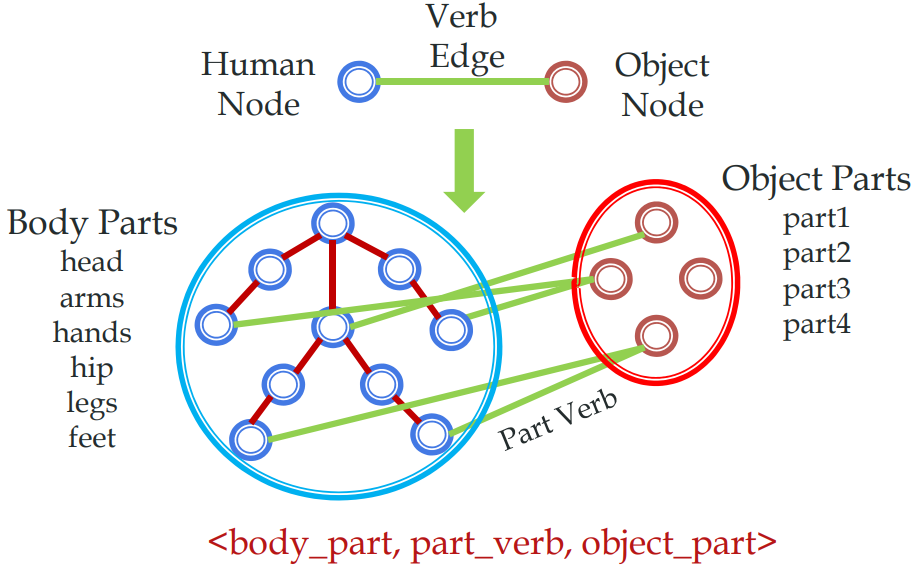

Yong-Lu Li, Siyuan Zhou, Xijie Huang, Liang Xu, Ze Ma, Hao-Shu Fang, Cewu Lu TPAMI 2021/CVPR 2019 Paper (TPAMI version) / Paper (CVPR version) / Code A transferable knowledge learner and can be cooperated with any HOI detection models to achieve desirable results. TIN outperforms state-of-the-art HOI detection results by a great margin, verifying its efficacy and flexibility. |

|

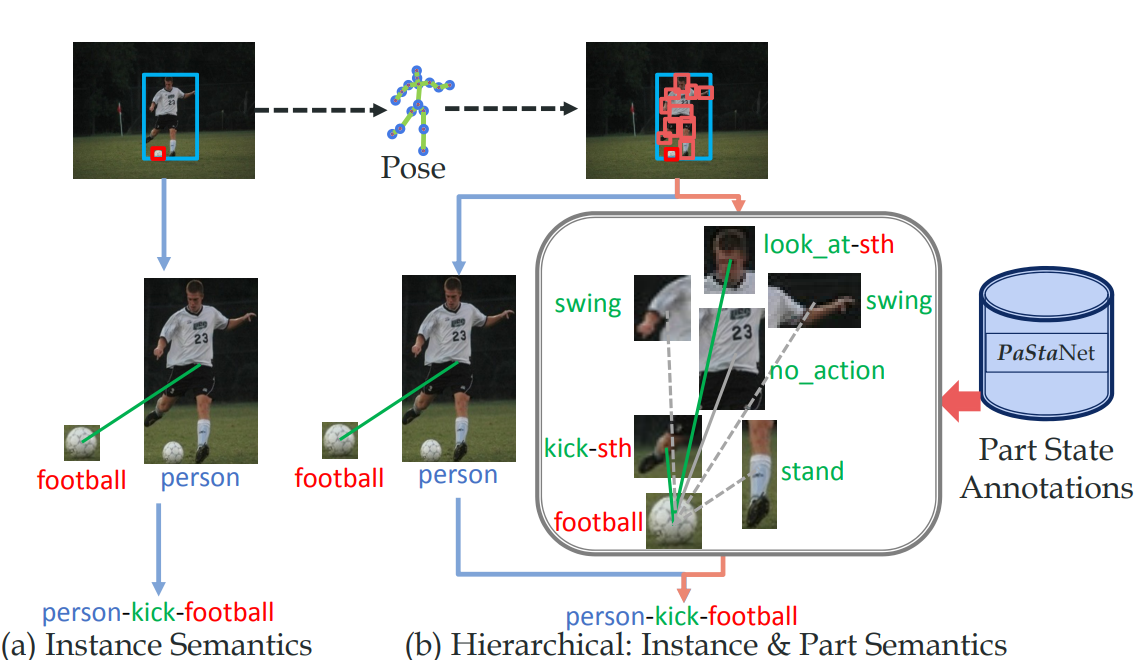

Yong-Lu Li, Liang Xu, Xinpeng Liu, Xijie Huang, Mingyang Chen, Shiyi Wang, Hao-Shu Fang, Cewu Lu CVPR 2020 Paper/ Code A large-scale knowledge base PaStaNet, which contains 7M+ PaSta annotations. And two corresponding models are proposed: first, we design a model named Activity2Vec to extract PaSta features, which aim to be general representations for various activities. |

|

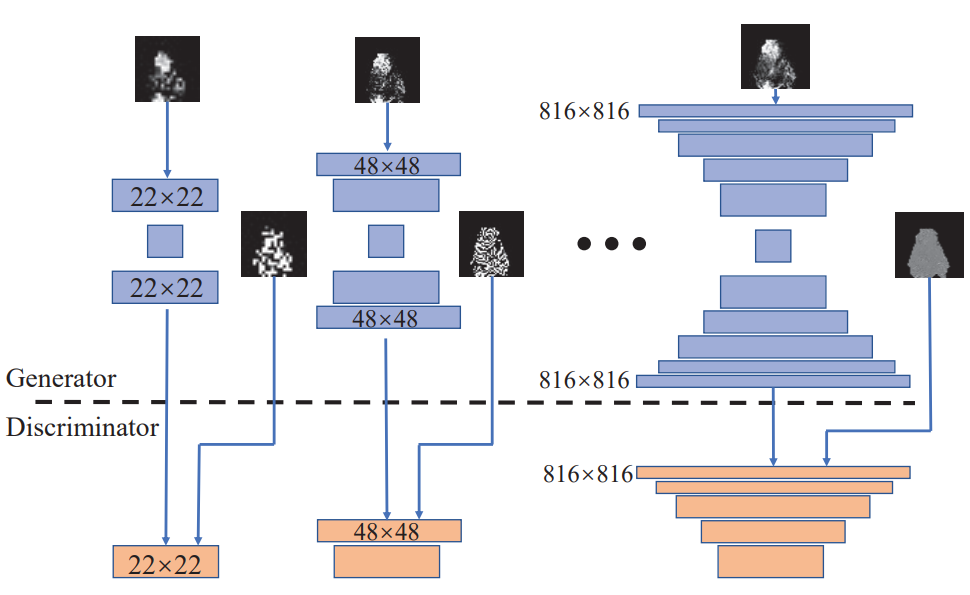

Xijie Huang, Peng Qian, Manhua Liu CVPRW 2020 Paper A latent fingerprint enhancement method based on the progressive generative adversarial network (GAN). |

Yong-Lu Li, Liang Xu, Xinpeng Liu, Xijie Huang, Ze Ma, Hao-Shu Fang, Cewu Lu
Preprint
Paper / Project webpage

A large-scale Human Activity Knowledge Engine (HAKE) based on human body part states to promote activity understanding.

|

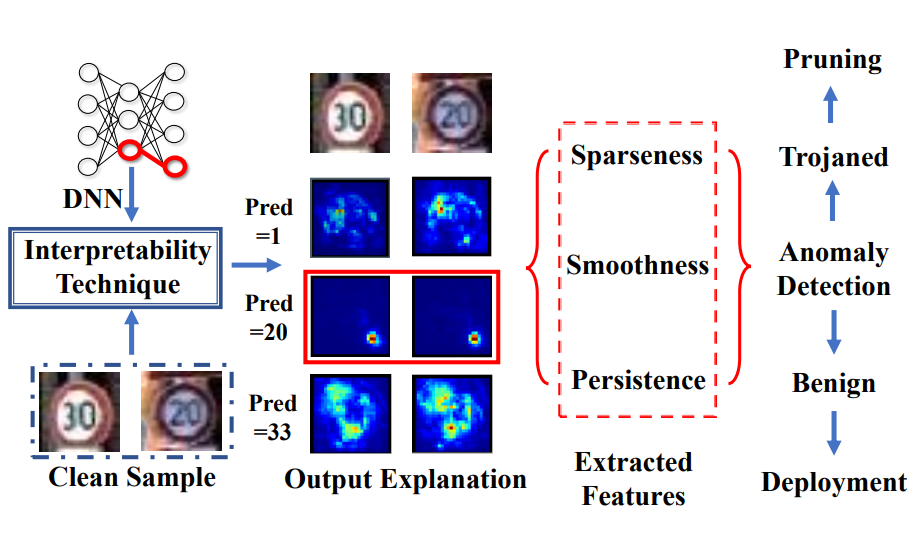

Xijie Huang, Moustafa Alzantot, Mani.Srivastava Preprint Paper A framework to detect trojan backdoors in DNNs via output explanation techniques |

Reviewer: ICLR 2024, ACM MM 2024, EMNLP 2023, NeurIPS 2023, ICCV 2023, CVPR 2023, WACV 2022-2023, AAAI 2022-2024, ECCV 2022/2024
Top 10% Reviewer, ICML 2022
Program Committee, ICCV 2023 Workshop on Low-Bit Quantized Neural Networks
Technical Program Committee, ICCV 2023 Workshop on Resource Efficient Deep Learning for Computer Vision

COMP 2211 (Exploring Artificial Intelligence), Lecturer: Professor Desmond Tsoi
COMP 5421 (Computer Vision), Lecturer: Professor Dan Xu
COMP 1021 (Introduction to Computer Science), Lecturer: Professor David Rossiter

National Scholarship (top 2% of students at SJTU), 2017
A Class Scholarship (top 2% of students at SJTU), 2017
CSST Scholarship (USD 5,343 from UCLA), 2019
RongChang Academic Scholarship (top 20 at SJTU), 2019
RedBird Scholarship, Postgraduate Studentship, 2020-2022
AAAI-22 Student Scholarship, 2022

Thanks to Barron's website template.